Program source code as machine learning data

This blog post is part of my project in the Neural Networks course at University of Tartu.

Nowadays machine learning, and neural networks specifically, are used to solve a wide spectrum of tasks. Besides simple real-valued vectors as data, successful techniques and architectures have been developed to also work with images, natural language and audio. As a programmer and a programming languages enthusiast the obvious question is how program source code can be input into a neural network to solve tasks which would benefit us the programmers.

First, I will describe what differentiates source code from the other mentioned types of input. Second, I will explain the path-based representation for inputting code into neural networks. Third, I will give a short summary of code2vec and code2seq, which are based on this representation. Last, I will discuss some of their limitations.

This gives an overview of the following articles:

- A General Path-Based Representation for Predicting Program Properties1,

- Code2Vec: Learning Distributed Representations of Code2,

- code2seq: Generating Sequences from Structured Representations of Code3.

How source code is represented?

Plainly put, source code is just text, so natural language is quite related. The symbols of both make lexical tokens and the token sequences of both contain grammatical structure, which can be represented by syntax trees. So naturally, one could take the token sequence of source code, as given by an existing lexer, and feed it into a recurrent neural network (RNN) just like a token (word) sequence of text.

Natural language processing (NLP) techniques have been developed for decades and are very successful, so this likely isn’t too bad. Unfortunately, it completely ignores the inherent structure of source code. Thus, to be successful, such neural network would have to learn the structure from scratch by example from the training data. Not only may this be inaccurate, it also requires a significant amount of training data and time.

Unlike natural language, where ambiguities are possible, code has very strictly defined structure which is enforced by a parser. Such parsers are fully precise and very fast to extract the structure of code in the form of a syntax tree. Although a similar thing exists in NLP, it’s a whole separate (machine learning) task on its own.

Therefore, instead of requiring a machine learning model to learn the structure of programs, we can just get it using a perfect parser. The result is a syntax tree or, after some additional processing, an abstract syntax tree (AST), which omits certain irrelevant details. ASTs are the fundamental representation of source code used by interpreters, compilers, program analyzers, etc. Hence, it makes sense to use the structure provided as an AST.

Example

For example, consder the following Java method, which checks if a list contains an element:

boolean f(Object target) {

for (Object elem: this.elements) {

if (elem.equals(target)) {

return true;

}

}

return false;

}

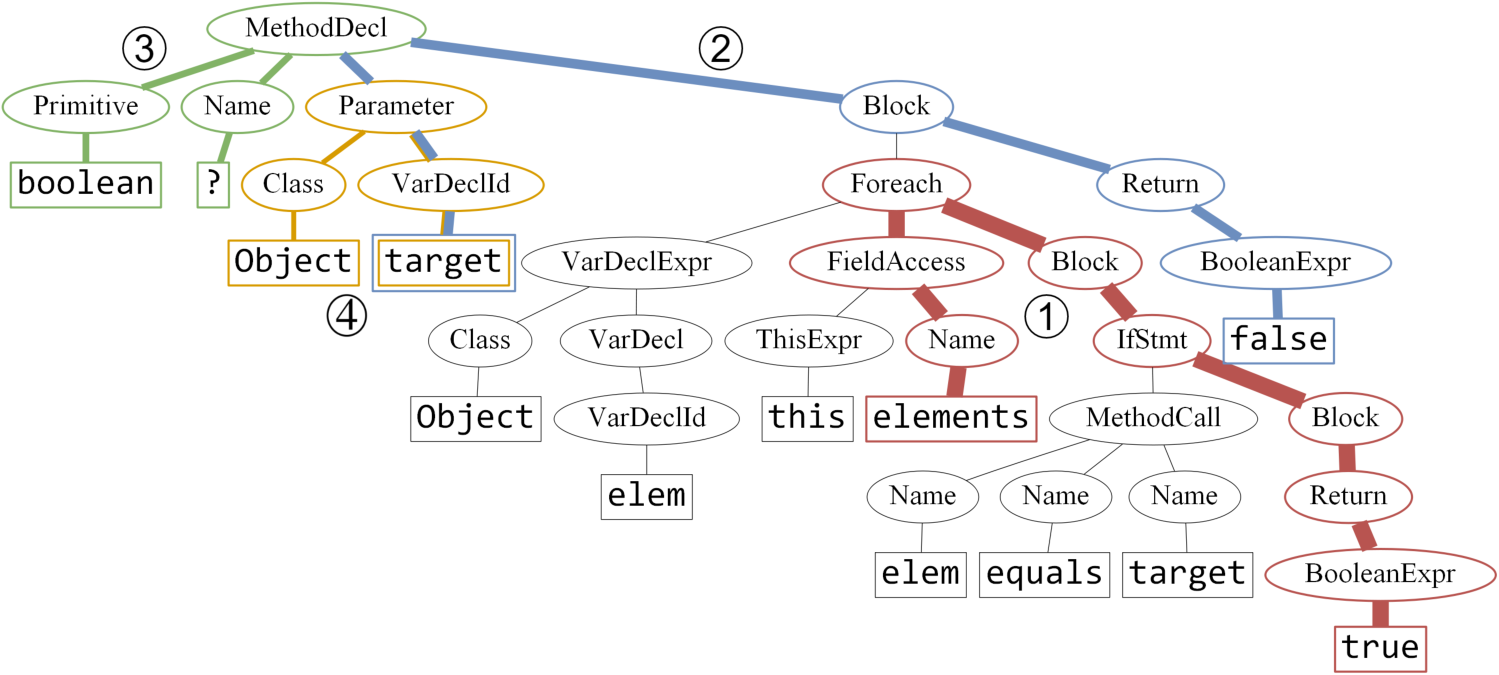

It has the following AST (ignore the colors and numbers for now)2:

How to input trees into neural networks?

Given an AST for a program, the problem is far from solved because mainstream techniques don’t take input in the form of trees. RNNs allow a network to consume linear input of varying length, but trees are nonlinear, have varying size and varying number of children nodes. Although techniques for tree input have been proposed, they won’t be considered here.

Instead, the people behind code2vec suggest the following: don’t input the tree directly, but input paths, which are linear. This works as follows1:

- Pick any two leaves of the AST.

- Represent them by their tokens including their data (e.g. variable name, integer value, etc).

- Trace the unique path which connects them in the AST.

- Represent the path as a sequence of AST intermediate node types delimited by up/down arrows, which indicate whether the path moves up or down the tree.

- Form a path-context triple: (first leaf, connecting path, second leaf).

A single path-context represents only one part of the AST, and by extension only one part of the code. To represent it all, just construct the bag/set of all path-contexts between all pairs of leaves.

Although a path-context can be constructed for every pair of leaves, it may be too inefficient and not really necessary. A limited number of paths (code2vec uses 200) may be randomly sampled or a maximum length limit may be set.

Example

In the previous example, consider the red path connecting the leaves elements and true. Its representation as a path-context is the following triple:

(elements, Name ↑ FieldAccess ↑ Foreach ↓ Block ↓ IfStmt ↓ Block ↓ Return ↓ BooleanExpr, true).

Reading this from left to right while following the arrows, it encodes a notable amount of structural information: elements is a field, which is iterated over using a foreach loop, which checks something for each element and if true, returns true.

This single path-context already captures the key idea of this method. Other also important parts of this AST are captured by the blue, green and yellow paths in the previous figure.

How code2vec works?

Based on the bag of path-contexts representation of source code, code2vec2 does for code what word2vec, etc do for natural language: represent the input as a single dense fixed-size embedding vector. As with word2vec, the goal for the vectors is to somehow capture their semantic properties, such that close vectors correspond to semantically similar inputs, and far vectors to semantically dissimilar ones. These are also known as distributed representations.

As word2vec has proven in NLP, such representations of the input are extremely useful for downstream machine learning tasks, which then don’t have to relearn all the semantic properties themselves. Hence code2vec does this for source code, so that code can be input into the downstream neural network like any other vector.

Training

In order to do this, the neural network part of code2vec takes as input the bag of path-contexts (which are extracted from the source code, as explained above). To train the embedding and have something to optimize, the downstream task of predicting the method name is used. This is apparently one of the most challenging tasks on source code while the method name should capture the semantics of its code.

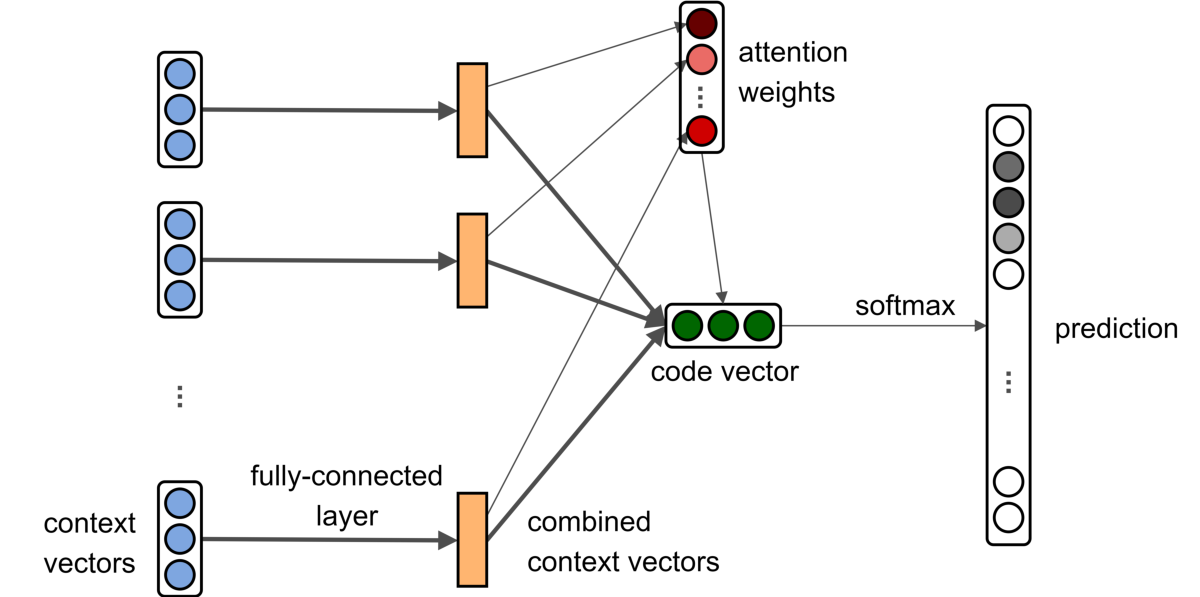

The following neural network is thus trained2:

In order, the layers work as follows:

- Each path-context triple consists of two tokens and a path. A simple embedding maps the tokens to token vectors and a simple embedding maps the entire path to a path vector. These simple embeddings are trained simultaneously with the network. The three vectors are then concatenated into a context vector.

- Each context vector is reduced in dimensionality via a fully-connected layer (with tanh activation) into a combined context vector.

- The bag of combined context vectors is aggregated into a single code vector, which is the desired distributed representation. A global attention weight vector is used to compute a weight for each combined context vector by dot product. This attention weight vector is trained simultaneously with the network. Then the combined context vectors are aggregated into a code vector using the (normalized) attention weights. Attention allows the network to choose, which of the many remotely incoming path-contexts are more important and which less important.

- Finally softmax is used to predict the method name from the code vector.

In the above example program and AST, the latter shows the top four path-contexts by their attention weight:

- red path from

elementstotrue(weight: 0.23), - blue path from

targettofalse(weight: 0.14), - green path from

booleanto?(actual function name is removed for to make prediction non-trivial), (weight: 0.09) - yellow path from

Objecttotarget(weight: 0.07).

Many other path-contexts also exist here but they are already too insignificant according to attention. The use of attention also improves the explainability of the model.

Results

This code2vec network achieves state-of-the-art performance in the method name predicition task. It shows that both the path-context–based representation and the learned distributed representation are very useful for working with source code.

The final softmax layer can be chopped off from the above network to simply get the distributed embeddings for other tasks. It still needs the preprocessing of parsing the code, constructing the AST and extracting the bag of path-contexts from it.

Famously word2vec embeddings have beautiful properties between the semantics of the words and their vectors. Surprisingly, this is also the case for code2vec! For example, adding the embedding vectors for equals and toLowerCase methods gives a vector closest to the embedding of equalsIgnoreCase. Many other similar examples were noticed by the authors and they can be explored on the code2vec website. This empirically proves that the learned distributed embeddings indeed capture some semantic properties of source code, which means that the trained code2vec network should also work well for tasks other than predicting method names.

How code2seq works?

The follow-up work code2seq3 improves upon code2vec in a number of ways. It is still based on the bag of path-contexts extracted from the AST and uses (combined) context vectors in the middle of the network.

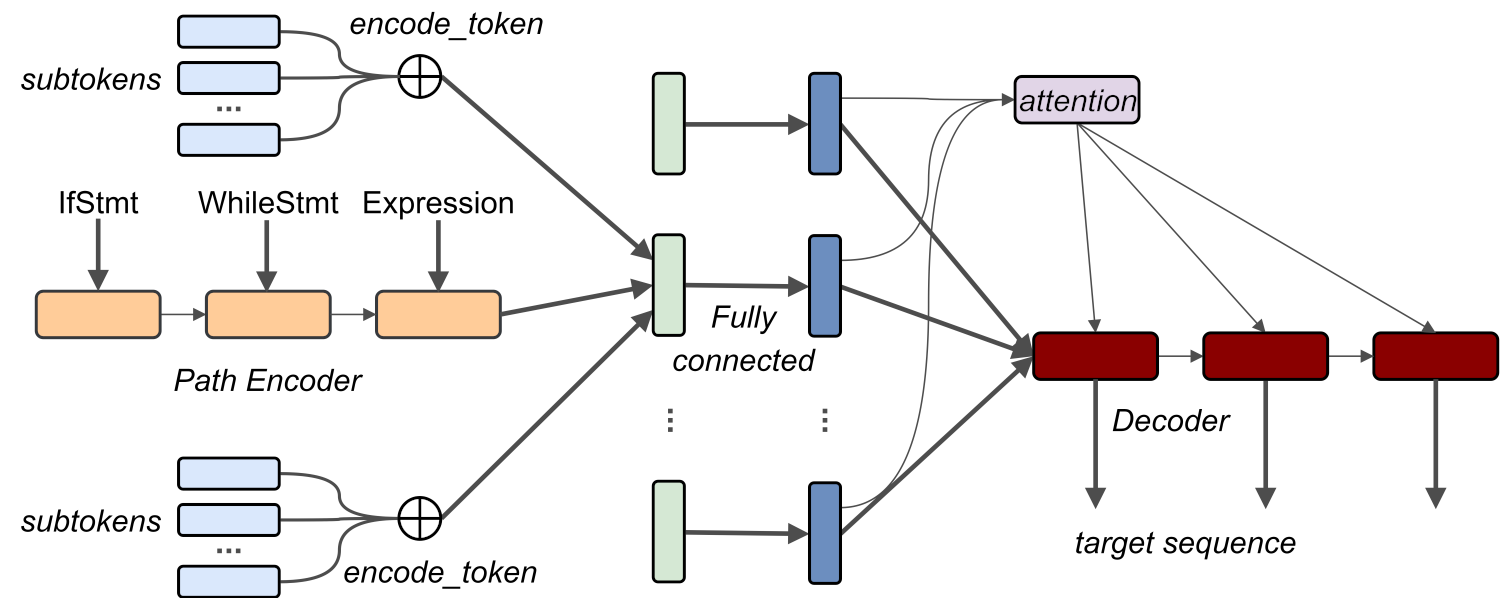

The following neural network is used3:

Differences from code2vec are the following:

- Tokens like

ArrayListare decomposed into subtokens likeArrayandList, which are embedded separately and summed. This allows the network to better exploit naming schemes present in source code. - Paths are not embedded directly and monolithically, but instead using a bidirectional LSTM layer. A simple embedding is used for the individual path components separately. This is highly preferred to embedding the paths of varying-length monolithically because a simple embedding may be quite sparse and not make use of state-of-the-art RNNs.

- No single code vector is constructed, so no distributed representation can be extracted for downstream tasks!

- The network is still trained to predict method names, but now as a sequence of subtokens, e.g.

equals,Ignore,Casefor the nameequalsIgnoreCase. It uses a decoder, which uses attention to attend over all the individual combined context vectors at every step.

How limited are the models?

Both code2vec and code2seq provide their trained models (for Java code) for download. My initial goal was to reuse these (e.g. by extracting distributed representations) and apply them to some other task which has source code as input, for example related to teaching duties of the Laboratory of Software Science. Unfortunately, this turned out to be more complicated than expected.

Firstly, both trained models only handle the source code of a single Java method, not an entire file, which contains a class with likely many methods, among other things. Although a file/class can be split up into methods to separately pass into a model, it would be very limited. The same class may implement all the logic in a single method or have it nicely organized into multiple methods. They would semantically be the same, but comparing a single distributed representation with multiple is not meaningful and loses the semantic properties of the embedding.

This is not a restriction of the path-based representation because the AST of the entire file/class could be used instead. But new models would have to be trained from scratch. Not only that, the training task itself would have to change from method name prediction to maybe class name prediction (which usually doesn’t capture the semantics of all the methods in the class) or something else. And of course the new training task would still need to be such that the learned distributed representations have semantic properties.

Secondly, while code2vec provides distributed representations of code suitable for downstream tasks, the more advanced code2seq architecture and model do not. Rather, the final output is a sequence and the layer before that is just the entire bag of combined context vectors. Therefore, it is possible to combine the best of both worlds:

- Use the first part of code2seq, which uses a RNN for path embedding.

- Use the middle part of code2vec, which aggregates the combined context vectors into a single distributed representation using attention.

Thirdly, there is not enough labelled data available for semantic downstream tasks. Although there is a lot of source code data available on GitHub (code2vec used 32GB, code2seq used 125GB), there are no labels for semantic properties. Both of these models were just trained to predict method names, something which syntactically already exists in the same code. It is more likely, that the distributed representations could be used in unsupervised tasks instead, e.g. detecting similar but not duplicate code by clustering.

How to continue from here?

Path-based representations are a promising generic and practical means of inputting source code into neural networks. Although introduced by code2vec and code2seq, others have started using them as well. Notably, JetBrains Research has developed astminer for extracting path-contexts from various languages (and can be extended further) and used them to build representations of authors’ coding style4.

Instead of an AST, another idea would be to extract such path-contexts from a control-flow graph (CFG). Control-flow of a method is more closely related to the runtime behavior and semantics of it. It would even be possible to inline method calls with CFGs to construct paths spanning across methods, regardless how well the code is structured. Although parsers exist for all programming languages, control-flow graph generators are almost impossible to come by because most languages have complex features, which significantly impact the flow, e.g. exceptions. Moreover, CFGs can only be constructed for executable code like method bodies, but not class fields or type declarations.

-

Uri Alon, Meital Zilberstein, Omer Levy, Eran Yahav. A General Path-Based Representation for Predicting Program Properties. PLDI 2018. URL: https://doi.org/10.1145/3192366.3192412. ↩ ↩2

-

Uri Alon, Meital Zilberstein, Omer Levy, Eran Yahav. Code2Vec: Learning Distributed Representations of Code. POPL 2019. URL: https://doi.org/10.1145/3290353. ↩ ↩2 ↩3 ↩4

-

Uri Alon, Shaked Brody, Omer Levy, Eran Yahav. code2seq: Generating Sequences from Structured Representations of Code. ICLR 2019. URL: https://openreview.net/forum?id=H1gKYo09tX. ↩ ↩2 ↩3

-

Vladimir Kovalenko, Egor Bogomolov, Timofey Bryksin, Alberto Bacchelli. Building Implicit Vector Representations of Individual Coding Style. ICSEW 2020. URL: https://arxiv.org/abs/2002.03997. ↩